I am using Keras with the TensorFlow backend for my project. When I first started, I was a bit surprised by the memory usage: although my model is no larger than 10 MB, it was taking up all of my GPU memory.

After reading the TensorFlow documentation, I found out that, by default, TensorFlow maps nearly all of the GPU memory. The purpose is to reduce memory fragmentation. The documentation also describes two different approaches for handling this situation.

The first method is to limit memory usage by percentage. For example, you can limit the application to just 20% of your GPU memory. If your GPU has 8 GB of memory, the application will use about 1.6 GB. (I am using Keras, so the examples are written the Keras way.)

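    import tensorflow as tf
    from keras.backend.tensorflow_backend import set_session

    # Cap this process at 20% of the GPU memory
    config = tf.ConfigProto()
    config.gpu_options.per_process_gpu_memory_fraction = 0.2
    # Make Keras use a session created with this configuration
    set_session(tf.Session(config=config))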
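To see what a given fraction means in absolute terms, the arithmetic is just the fraction times the total GPU memory. Here is a minimal sketch; the helper name `memory_cap_gb` is made up for illustration and is not part of Keras or TensorFlow.

```python
def memory_cap_gb(total_gb, fraction):
    """Return the approximate memory cap in GB for a fraction of GPU memory."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in the range (0, 1]")
    return total_gb * fraction


# 20% of an 8 GB card
print(memory_cap_gb(8, 0.2))
```

So a fraction of 0.2 on an 8 GB card works out to roughly 1.6 GB.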

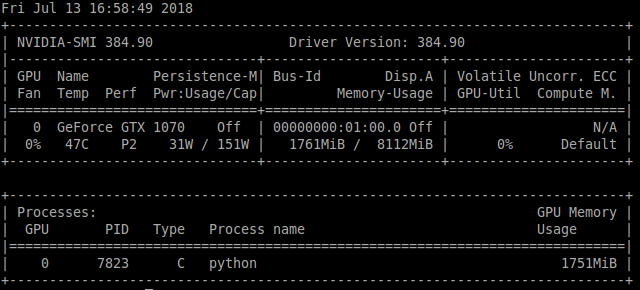

After setting this configuration, we can see the difference.

It now uses only 1.6 GB of memory. With this configuration, however, we only set a cap by percentage, and the application still reserves 100% of that cap, so we cannot tell exactly how much memory the application really needs. So let's try the second method.

The second method is to use allow_growth. With this option, the application starts with a small allocation and grows it at runtime, so it only takes as much GPU memory as it actually needs.

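    import tensorflow as tf
    from keras.backend.tensorflow_backend import set_session

    # Allocate GPU memory on demand instead of reserving it all up front
    config = tf.ConfigProto()
    config.gpu_options.allow_growth = True
    sess = tf.Session(config=config)
    # Make Keras use a session created with this configuration
    set_session(sess)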
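The snippets in this post use the TensorFlow 1.x session API. On TensorFlow 2.x, where `tf.ConfigProto` and `tf.Session` are no longer top-level names, a rough equivalent of both methods might look like the sketch below. The function names `enable_memory_growth` and `cap_gpu_memory` are my own and purely illustrative, and TensorFlow is imported inside each function so the file still loads on a machine without it. Note that the 2.x cap is given in megabytes, not as a fraction.

```python
def enable_memory_growth():
    """Ask TensorFlow 2.x to grow GPU memory on demand (like allow_growth)."""
    import tensorflow as tf  # imported lazily; assumes TensorFlow 2.x is installed

    gpus = tf.config.list_physical_devices("GPU")
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
    return len(gpus)  # number of GPUs that were configured


def cap_gpu_memory(limit_mb):
    """Cap TensorFlow 2.x to roughly limit_mb megabytes on the first GPU."""
    import tensorflow as tf  # imported lazily; assumes TensorFlow 2.x is installed

    gpus = tf.config.list_physical_devices("GPU")
    if not gpus:
        return False  # nothing to configure on a CPU-only machine
    tf.config.set_logical_device_configuration(
        gpus[0],
        [tf.config.LogicalDeviceConfiguration(memory_limit=limit_mb)],
    )
    return True
```

Either function has to be called at the very top of the script, before TensorFlow has initialized the GPU; otherwise TensorFlow raises a RuntimeError.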

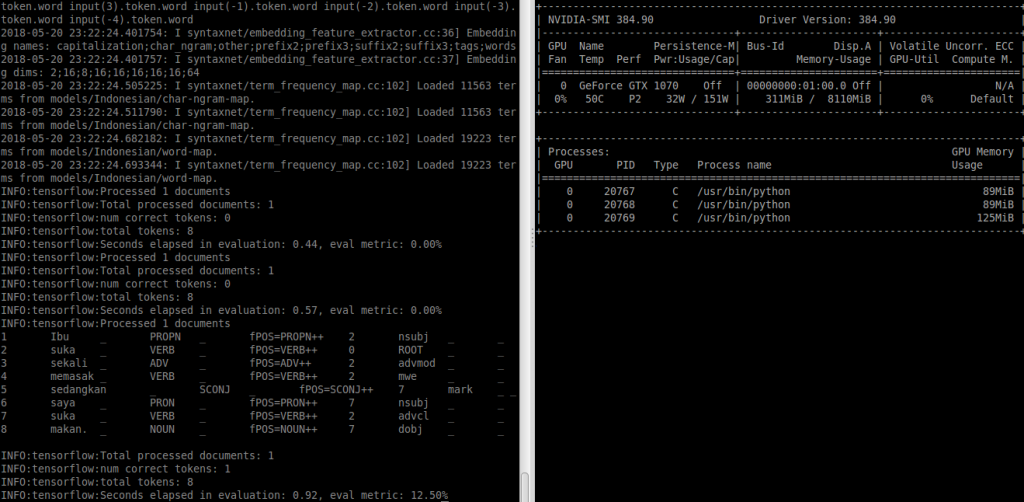

And this is the result.
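If you would rather read these numbers from a script than from a screenshot, one option is to ask the NVIDIA driver tool directly. This is only a sketch: it assumes `nvidia-smi` is installed and on the PATH, the helper names `parse_gpu_memory` and `query_gpu_memory` are made up for illustration, and the sample values in the last line are invented so the parser can be tried without a GPU.

```python
import subprocess


def parse_gpu_memory(csv_text):
    """Parse 'used, total' lines (in MiB) into a list of (used, total) tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


def query_gpu_memory():
    """Run nvidia-smi and return (used_mib, total_mib) for each GPU."""
    output = subprocess.check_output(
        [
            "nvidia-smi",
            "--query-gpu=memory.used,memory.total",
            "--format=csv,noheader,nounits",
        ],
        text=True,
    )
    return parse_gpu_memory(output)


# Parsing an invented sample line, so this part runs even without a GPU
print(parse_gpu_memory("1623, 8192"))
```

Keep in mind that this reports memory reserved on the whole GPU, so with the percentage method it shows the cap, not what the model really needs.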

So, after trying both of these methods, which one will you use for your application? 😀